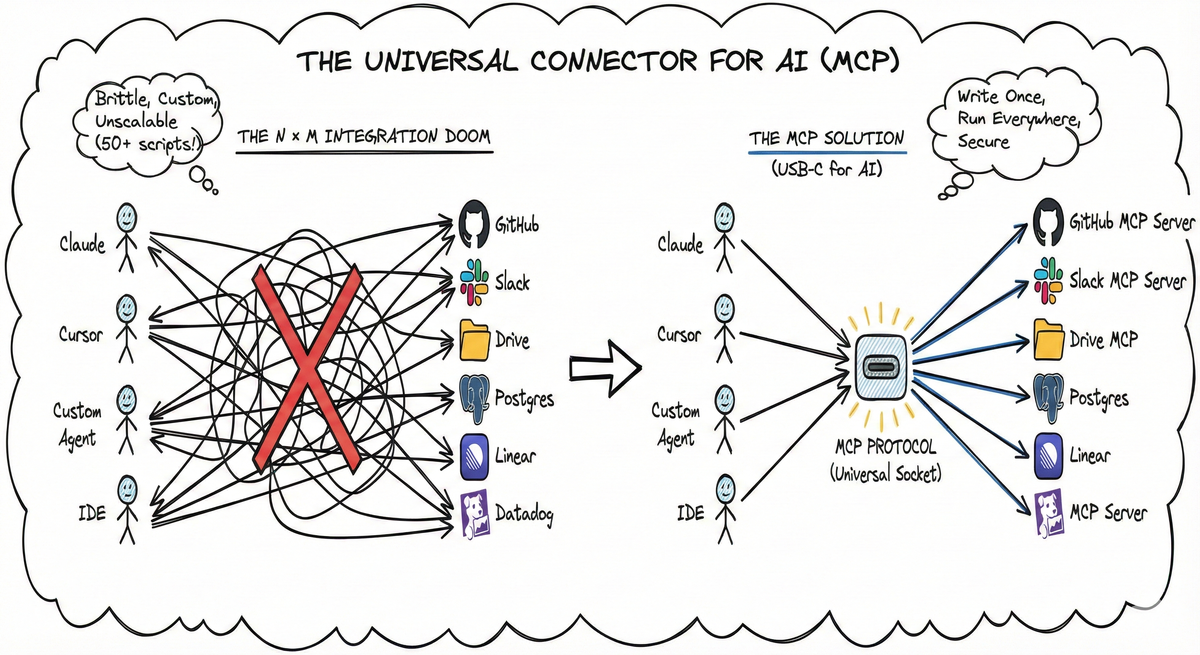

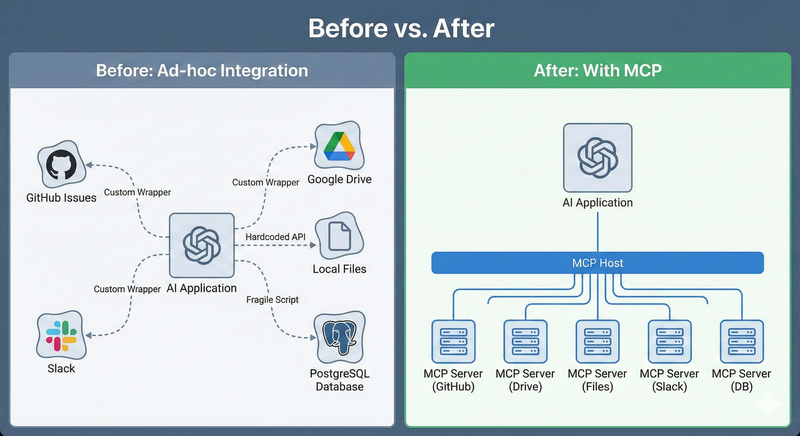

TL;DR: Building AI agents today feels like writing printer drivers in the 90s. We face an N × M integration problem where every AI app needs a custom connector for every data source. MCP (Model Context Protocol) solves this by acting as a universal open standard—effectively the USB-C for AI applications.

1. The “Smart” Model, “Stupid” Context Problem#

Imagine you have the smartest intern in the world (your LLM). They have read every book in the library. But if you ask them, “Why did our Q3 deployment fail?”, they hallucinate. Why? Because they don’t have your context. To fix this, developers have spent the last two years building Pipelines:

- Write a Python script to scrape GitHub issues.

- Write another script to fetch logs from Datadog.

- Format it all into a massive text blob.

- Paste it into the prompt. This works for a weekend prototype. It fails in production.

2. The N × M Matrix of Doom#

Here is the real problem. We don’t just have one AI (Claude); we have dozens (ChatGPT, Cursor, IDEs, Custom Agents). We don’t just have one data source; we have hundreds (Postgres, Slack, Linear, Google Drive). Without a standard, you have to build a custom integration for every single combination.

graph TD

subgraph "The Old Way (N x M Mess)"

A[Claude] --> G[GitHub]

A --> S[Slack]

A --> D[Drive]

B[Cursor] --> G

B --> S

C[Custom Agent] --> D

end

This is the N × M problem. If you have 5 AI clients and 10 tools, you need to maintain 50 different integration scripts. It is brittle, insecure, and unscalable.

3. The Solution: A Universal Socket (MCP)#

Enter the Model Context Protocol (MCP). Think of MCP as USB-C for AI.

- USB-C: You don’t buy a “Dell Mouse” or a “Mac Keyboard.” You just buy a USB mouse, and it works with any computer.

- MCP: You don’t write a “Claude GitHub Tool.” You write a generic MCP GitHub Server. Now Claude, Cursor, Zed, and your custom agent can all use it instantly.

graph TD subgraph "The MCP Way (1 x N)" Host1[Claude] --> Protocol((MCP Protocol)) Host2[IDE] --> Protocol Host3[Agent] --> Protocol end Protocol --> Server1[GitHub Server] Protocol --> Server2[Postgres Server] Protocol --> Server3[Slack Server] style Protocol fill:#f96,stroke:#333,stroke-width:4px

4. Why This Changes Everything#

- Write Once, Run Everywhere: Build a tool to query your internal SQL database once. It now works in your IDE, your chat bot, and your automated workflows.

- Data stays where it belongs: Instead of ETL-ing your data into a Vector DB, MCP lets the AI fetch data on demand from the source of truth.

- Security by Design: With a standard protocol, you can bake in authentication, authorization, and auditing at the MCP server level.

- Ecosystem Growth: Just like USB-C led to a boom in peripherals, MCP will enable a thriving ecosystem of AI tools and integrations.

5. Next Steps#

In the next part, we’ll dive into the technical details of the MCP specification—how the protocol works, its core capabilities, and how you can start building MCP-compliant tools today. Stay tuned!